If you mean the database section in the config.yml, yes:

    database:
      # Database type to use. Supported values are mysql, postgres and sqlite. Vikunja is able to run with MySQL 8.0+, Mariadb 10.2+, PostgreSQL 12+, and sqlite.
      type: "postgres"
      # Database user which is used to connect to the database.
      user: "dev"
      # Database password
      password: "some_unsecure_password"
      # Database host
      host: "localhost"
      # Database to use
      database: "devdb"
      # When using sqlite, this is the path where to store the data
      path: "./vikunja.db"
      # Sets the max open connections to the database. Only used when using mysql and postgres.
      maxopenconnections: 100
      # Sets the maximum number of idle connections to the db.
      maxidleconnections: 50
      # The maximum lifetime of a single db connection in milliseconds.
      maxconnectionlifetime: 10000

The database log looks as follows:

2025-02-22T10:44:46.667296109Z: INFO ▶ [DATABASE] 004^[[0m [SQL] SELECT tablename FROM pg_tables WHERE schemaname = $1 [public] - 12.439974ms

2025-02-22T10:44:46.682627636Z: INFO ▶ [DATABASE] 005^[[0m [SQL] SELECT column_name, column_default, is_nullable, data_type, character_maximum_length, description,

CASE WHEN p.contype = 'p' THEN true ELSE false END AS primarykey,

CASE WHEN p.contype = 'u' THEN true ELSE false END AS uniquekey

FROM pg_attribute f

JOIN pg_class c ON c.oid = f.attrelid JOIN pg_type t ON t.oid = f.atttypid

LEFT JOIN pg_attrdef d ON d.adrelid = c.oid AND d.adnum = f.attnum

LEFT JOIN pg_description de ON f.attrelid=de.objoid AND f.attnum=de.objsubid

LEFT JOIN pg_namespace n ON n.oid = c.relnamespace

LEFT JOIN pg_constraint p ON p.conrelid = c.oid AND f.attnum = ANY (p.conkey)

LEFT JOIN pg_class AS g ON p.confrelid = g.oid

LEFT JOIN INFORMATION_SCHEMA.COLUMNS s ON s.column_name=f.attname AND c.relname=s.table_name

WHERE n.nspname= s.table_schema AND c.relkind = 'r' AND c.relname = $1 AND s.table_schema = $2 AND f.attnum > 0 ORDER BY f.attnum; [migration public] - 15.129377ms

2025-02-22T10:44:46.685035397Z: INFO ▶ [DATABASE] 006^[[0m [SQL] SELECT indexname, indexdef FROM pg_indexes WHERE tablename=$1 AND schemaname=$2 [migration public] - 2.235786ms

^[[33m2025-02-22T10:44:46.685107774Z: WARNING ▶ [DATABASE] 007^[[0m Table migration column id db type is TEXT, struct type is VARCHAR(255)

^[[33m2025-02-22T10:44:46.685128007Z: WARNING ▶ [DATABASE] 008^[[0m Table migration column description db type is TEXT, struct type is VARCHAR(255)

2025-02-22T10:44:46.685781644Z: INFO ▶ [DATABASE] 009^[[0m [SQL] SELECT count(*) FROM "migration" WHERE "id" IN ($1) [SCHEMA_INIT] - 580.417µs

2025-02-22T10:44:46.686153949Z: INFO ▶ [DATABASE] 00a^[[0m [SQL] SELECT count(*) FROM "migration" WHERE "id" IN ($1) [20190324205606] - 312.897µs

2025-02-22T10:44:46.686420181Z: INFO ▶ [DATABASE] 00b^[[0m [SQL] SELECT count(*) FROM "migration" WHERE "id" IN ($1) [20190328074430] - 222.499µs

2025-02-22T10:44:46.686653661Z: INFO ▶ [DATABASE] 00c^[[0m [SQL] SELECT count(*) FROM "migration" WHERE "id" IN ($1) [20190430111111] - 197.649µs

2025-02-22T10:44:46.68689575Z: INFO ▶ [DATABASE] 00d^[[0m [SQL] SELECT count(*) FROM "migration" WHERE "id" IN ($1) [20190511202210] - 202.442µs

2025-02-22T10:44:46.687188882Z: INFO ▶ [DATABASE] 00e^[[0m [SQL] SELECT count(*) FROM "migration" WHERE "id" IN ($1) [20190514192749] - 218.493µs

2025-02-22T10:44:46.687408343Z: INFO ▶ [DATABASE] 00f^[[0m [SQL] SELECT count(*) FROM "migration" WHERE "id" IN ($1) [20190524205441] - 186.34µs

(...)

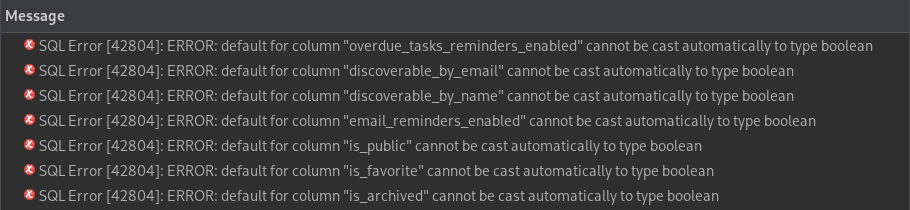

I believe it has something to do with the WARNING lines. I don't want to play Captain Obvious, but these are the only two warnings I get in the log file. If needed, I can upload the complete log.

^[[33m2025-02-22T10:44:46.685107774Z: WARNING ▶ [DATABASE] 007^[[0m Table migration column id db type is TEXT, struct type is VARCHAR(255)

^[[33m2025-02-22T10:44:46.685128007Z: WARNING ▶ [DATABASE] 008^[[0m Table migration column description db type is TEXT, struct type is VARCHAR(255)
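To double-check that these really are the only warnings, the log can be filtered with a few lines of Python. This is only a minimal sketch: the function name `warning_lines` is made up for illustration, and it assumes the colour codes appear either as real escape bytes or as the literal `^[[` text seen in the excerpt above.

```python
import re

# Matches ANSI colour sequences, both as a real ESC byte and as the
# caret notation "^[" that appears when a log is pasted as plain text.
ANSI_RE = re.compile(r"(?:\x1b|\^\[)\[[0-9;]*m")


def warning_lines(lines):
    """Return the WARNING-level lines with colour codes removed."""
    cleaned = (ANSI_RE.sub("", line).strip() for line in lines)
    return [line for line in cleaned if ": WARNING " in line]


# Two lines copied from the log excerpt above.
SAMPLE = [
    "2025-02-22T10:44:46.685035397Z: INFO ▶ [DATABASE] 006^[[0m [SQL] "
    "SELECT indexname, indexdef FROM pg_indexes WHERE tablename=$1 AND "
    "schemaname=$2 [migration public] - 2.235786ms",
    "^[[33m2025-02-22T10:44:46.685107774Z: WARNING ▶ [DATABASE] 007^[[0m "
    "Table migration column id db type is TEXT, struct type is VARCHAR(255)",
]

for line in warning_lines(SAMPLE):
    print(line)
```

For the full log, the same function can be fed an open file object instead of `SAMPLE`.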

Also, I have noticed that the error message in the Vikunja Desktop app only appears when I click on Overview and Upcoming. Nothing else throws an error message.

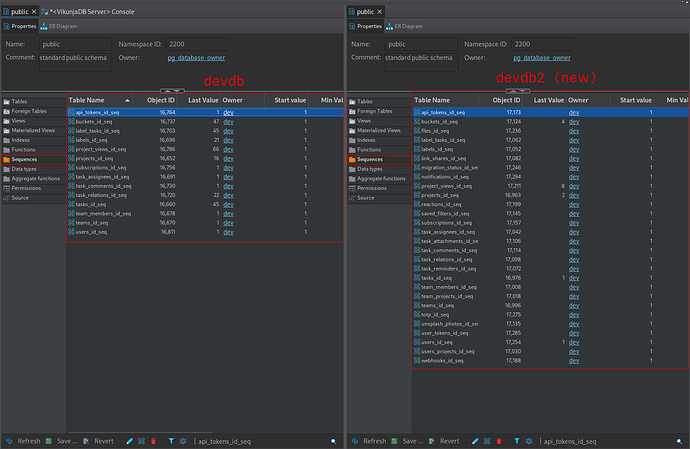

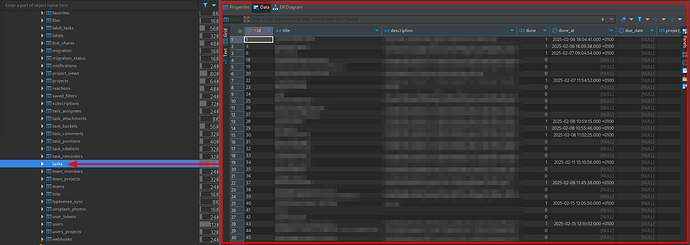

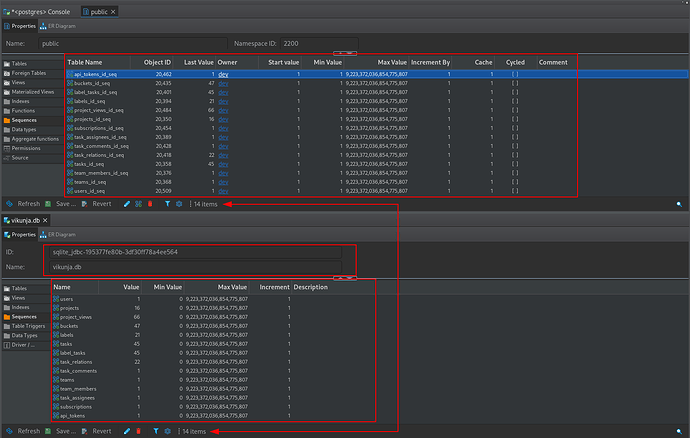

I checked for differences between the SQLite and PostgreSQL databases, or more precisely in the `migration` table, but did not find any, or at least none that I'm aware of. However, I checked through a GUI application called Antares SQL, so the information shown there may be limited.
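Since a GUI may hide details, the declared column types on the SQLite side could also be read directly. The following is a rough sketch using Python's built-in `sqlite3` module. The helper `column_types` is hypothetical, and the demo schema is invented to mirror the `VARCHAR(255)` struct type named in the warnings; it is not taken from the real database.

```python
import sqlite3


def column_types(conn, table):
    """Return {column name: declared type} for one SQLite table."""
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
    # PRAGMA arguments cannot be bound as parameters, so quote the name.
    quoted = '"' + table.replace('"', '""') + '"'
    rows = conn.execute(f"PRAGMA table_info({quoted})").fetchall()
    return {name: decl_type for _, name, decl_type, *_ in rows}


# Demo against an in-memory database with an assumed schema. For the real
# check, connect to the file from the config instead, for example
# sqlite3.connect("./vikunja.db").
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE migration (id VARCHAR(255), description VARCHAR(255))"
)
print(column_types(conn, "migration"))
```

On the PostgreSQL side, the comparable information lives in `information_schema.columns`, which is also what the introspection query in the database log reads from.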

I also got these errors on stdout when I start Vikunja via the CLI:

2025-02-22T11:05:20Z: WEB ▶ 192.168.200.1 GET 500 /api/v1/labels?page=1 31.932181ms - Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) vikunja-desktop/v0.24.3 Chrome/122.0.6261.156 Electron/29.4.6 Safari/537.36

(...)

2025-02-22T11:05:31Z: WEB ▶ 192.168.200.1 GET 500 /api/v1/labels?page=1 24.129382ms - Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) vikunja-desktop/v0.24.3 Chrome/122.0.6261.156 Electron/29.4.6 Safari/537.36

(...)

2025-02-22T11:05:33Z: WEB ▶ 192.168.200.1 GET 500 /api/v1/tasks/all?sort_by[]=due_date&sort_by[]=id&order_by[]=asc&order_by[]=desc&filter=done+%3D+false&filter_include_nulls=false&s=&filter_timezone=Europe%2FZurich&page=1 4.223774ms - Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) vikunja-desktop/v0.24.3 Chrome/122.0.6261.156 Electron/29.4.6 Safari/537.36

(...)

2025-02-22T11:05:33Z: WEB ▶ 192.168.200.1 GET 500 /api/v1/tasks/all?sort_by[]=due_date&sort_by[]=id&order_by[]=asc&order_by[]=desc&filter=done+%3D+false+%26%26+due_date+%3C+%272025-03-01T11:05:33.679Z%27+%26%26+due_date+%3E+%272025-02-22T11:05:33.679Z%27&filter_include_nulls=false&s=&filter_timezone=Europe%2FZurich&page=1 3.707673ms - Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) vikunja-desktop/v0.24.3 Chrome/122.0.6261.156 Electron/29.4.6 Safari/537.36
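To see at a glance which endpoints fail, the WEB lines can be grouped by path. This is a small sketch, not part of Vikunja: the function name `failing_endpoints` is made up, and the regular expression assumes the line shape visible in the excerpt above (timestamp, `WEB ▶`, client IP, method, status, URL).

```python
import re
from collections import Counter

# Assumed line shape, taken from the excerpt above:
#   <timestamp>: WEB ▶ <client ip> <METHOD> <status> <url> <duration> - <agent>
WEB_RE = re.compile(
    r"WEB ▶ \S+ (?P<method>[A-Z]+) (?P<status>\d{3}) (?P<url>\S+)"
)


def failing_endpoints(lines, status="500"):
    """Count requests with the given status, keyed by (method, path)."""
    counts = Counter()
    for line in lines:
        match = WEB_RE.search(line)
        if match and match.group("status") == status:
            # Drop the query string so all variants of a path group together.
            path = match.group("url").split("?", 1)[0]
            counts[(match.group("method"), path)] += 1
    return counts


# Two lines from the excerpt above, with the user agent trimmed for brevity.
SAMPLE = [
    "2025-02-22T11:05:20Z: WEB ▶ 192.168.200.1 GET 500 "
    "/api/v1/labels?page=1 31.932181ms",
    "2025-02-22T11:05:33Z: WEB ▶ 192.168.200.1 GET 500 "
    "/api/v1/tasks/all?sort_by[]=due_date&sort_by[]=id&page=1 4.223774ms",
]

for (method, path), count in failing_endpoints(SAMPLE).most_common():
    print(count, method, path)
```

In the excerpt, only `/api/v1/labels` and `/api/v1/tasks/all` return 500.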

Yes, I know, but I have not yet learned Docker or, preferably, Podman. I have created a task for it in Vikunja, though. Anyway, for situations like this I have a development/test VM, so cluttering the system is no problem there.

I hope I have summarized all the important information; if not, just ask.
